GenAI: the illusion of intelligence and sobriety, according to Luc Julia

Atmosphere in the main hall during the opening keynote. The main stage at Devoxx France 2025 welcomed Luc Julia for an unfiltered speech on AI.

The opening keynote set the tone. Luc Julia, co-creator of Siri and Chief Scientific Officer at Renault, delivered a captivating and critical speech on artificial intelligence, particularly generative AI. Its provocative title, "Artificial Intelligence Does Not Exist," made his position clear from the start. According to him, the very term AI is a source of confusion: these systems, including GPT-type language models, do not "think" and are not intelligent in the human sense of the word. He insisted that there is no magic behind ChatGPT, just statistics computed over huge volumes of data, which explains the sometimes bizarre responses, or "hallucinations," that these models produce.

Luc Julia debunked a number of myths, pointing out that each AI remains specialized in a specific field and that creativity and discernment remain the preserve of humans. I particularly liked his analogy of AI as a simple "toolbox": it is up to us to tame it, train it on reliable data, and use it wisely for well-defined tasks. On the ethical side, he emphasized that the ethics of AI are above all those of its creators. In other words, it is up to us to set the rules of use, just as a hammer can be used to build or to hurt, depending on the user's intention.

Finally, he sent a strong message about digital sobriety: "Generative AI is an ecological aberration," he said, backing it up with figures. If we sent as many requests to a chatbot as we do to Google, the energy consumption would be exorbitant and unsustainable. For us as professionals, this is a reminder to keep a cool head in the face of media hype: before deploying the latest trendy model, let's make sure it is relevant to our business and efficient. Luc Julia's perspective struck me as a healthy way to approach the rest of the conference with pragmatism.

Regulation and security: CRA, SBOM, and DevSecOps in action

The mid-morning session featured an excellent talk focused on compliance and security, centered on the new European Cyber Resilience Act (CRA). The speakers (a team from Thales) detailed the requirements of this upcoming regulation and its impact on our DevSecOps practices. Simply put, the CRA will impose a series of security-by-design and vulnerability monitoring requirements on digital products throughout their lifecycle.

Slide presented by Thales listing the key requirements of the Cyber Resilience Act: principles of Security by Design and proactive vulnerability management.

This slide sums up the spirit of the CRA: integrating security from the design stage (risk analysis, secure default configuration, access control, data protection, reduction of the attack surface, traceability of sensitive actions, etc.) and implementing exemplary vulnerability management (inventory of dependencies and flaws, regular security tests, immediate correction of vulnerabilities, continuous updates, and vulnerability reporting mechanisms). In other words, gone are the days when security could be dealt with later or treated as an "option": we will have to be able to demonstrate that our applications systematically comply with these best practices.

One specific point discussed was the role of the SBOM (Software Bill of Materials): keeping an up-to-date list of all open source libraries and components used, along with their versions and any known CVEs, is becoming essential to meet the CRA's transparency requirements. In practice, this means integrating dependency analysis and vulnerability monitoring tools into our CI/CD pipelines. The speakers also emphasized automation: given the scale of the controls required, only DevSecOps tooling can make compliance scale.
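To make the SBOM point concrete, here is a minimal Python sketch of the kind of check a CI job performs: read an SBOM and flag components that match known advisories. The SBOM excerpt is shaped like CycloneDX JSON, but the component list and the advisory table are hypothetical illustration data (apart from the well-known Log4Shell entry), and matching on exact versions is a simplification: real scanners compare version ranges against vulnerability databases.

```python
import json

# Hypothetical SBOM excerpt, shaped like CycloneDX JSON ("components" with "name" and "version").
# "hypothetical-utils" is an invented component used only for illustration.
SBOM_JSON = """
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.5",
  "components": [
    {"type": "library", "name": "log4j-core", "version": "2.14.1"},
    {"type": "library", "name": "hypothetical-utils", "version": "1.2.3"}
  ]
}
"""

# Hypothetical advisory table: (component name, exact version) -> advisory ID.
# Real scanners match version ranges against vulnerability feeds instead.
KNOWN_VULNERABLE = {
    ("log4j-core", "2.14.1"): "CVE-2021-44228",
}


def audit(sbom_text: str, advisories: dict) -> list:
    """Return one finding per SBOM component listed in the advisory table."""
    sbom = json.loads(sbom_text)
    findings = []
    for component in sbom.get("components", []):
        key = (component["name"], component.get("version", ""))
        advisory = advisories.get(key)
        if advisory is not None:
            findings.append(f"{key[0]}@{key[1]}: {advisory}")
    return findings


if __name__ == "__main__":
    results = audit(SBOM_JSON, KNOWN_VULNERABLE)
    print("\n".join(results) or "no known vulnerabilities")
    # A CI job would typically fail the build (non-zero exit) when results is non-empty.
```

Generating the SBOM itself is best left to dedicated tools in the build; the value of a check like this is that it runs on every pipeline execution, not once per audit.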

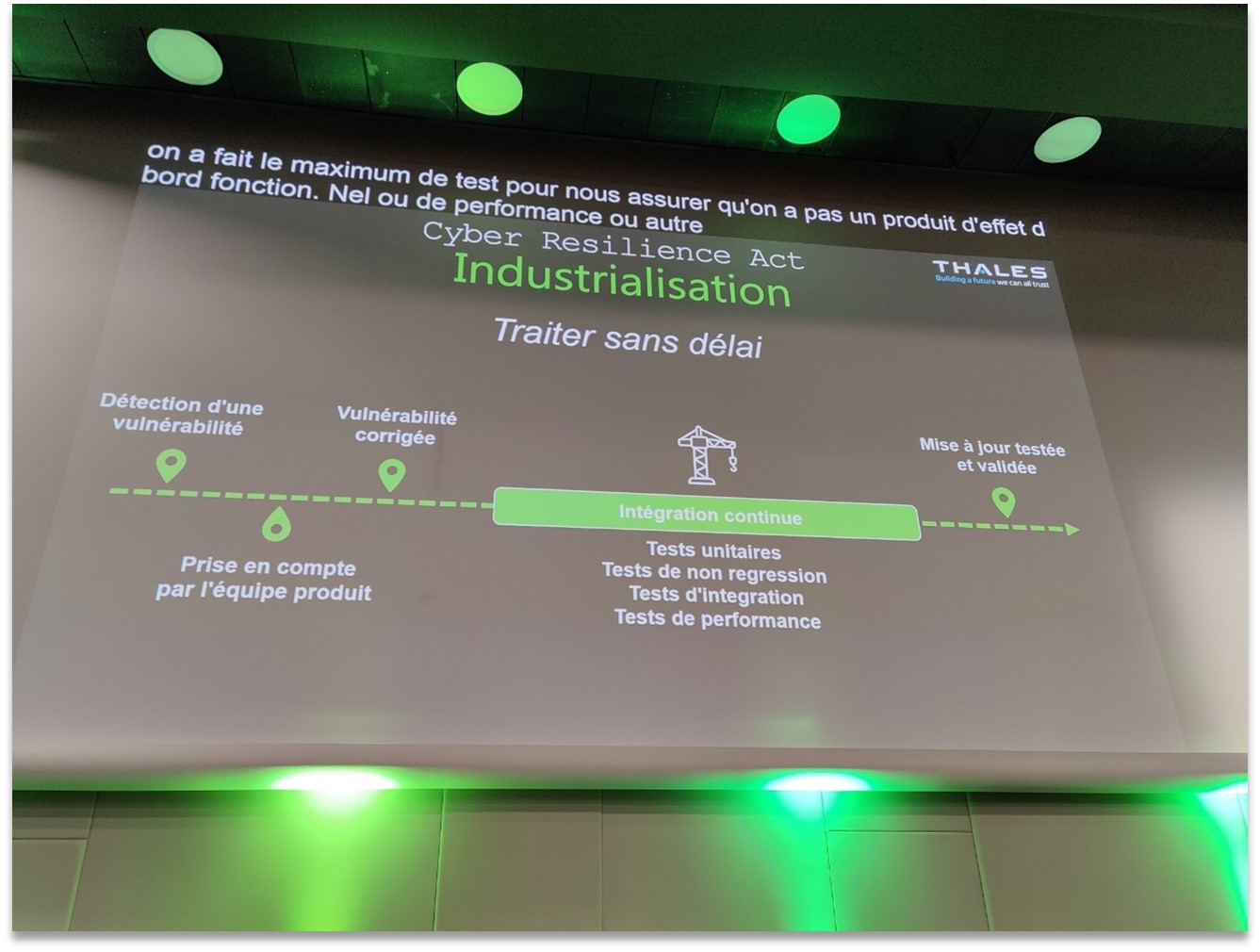

Security industrialization pipeline presented as part of the CRA: as soon as a vulnerability is detected, a fix is integrated and deployed via continuous integration, with a series of tests (unit, non-regression, performance) before going into production.

This diagram illustrates the recommended DevSecOps approach to meeting the CRA: the time between discovering a vulnerability and deploying its fix to production must be minimized. Ideally, as soon as a vulnerability is reported (for example, in a third-party library), the product team takes immediate action, fixes it, and then triggers the CI/CD pipeline to test the update (unit, integration, non-regression, performance tests, etc.) in order to deliver a corrected version without delay. This is a far cry from the traditional patch management cycle, which can take several weeks: near-real-time response is becoming the norm. As a DevSecOps manager, I see this challenge as an opportunity to push automation further (security scans, deployments, compliance report generation) and turn a regulatory constraint into a quality advantage. Admittedly, bending over backwards for compliance may seem burdensome, but ultimately, a more secure product that is continuously updated also means fewer incidents and crises to deal with.
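The ordering constraint in that diagram, with deployment reachable only once every test gate has passed, can be sketched as a fail-fast pipeline runner. This is an illustrative Python sketch, not the speakers' tooling; the stage names are hypothetical, and each lambda stands in for a real CI job.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    """One pipeline step; `run` returns True on success (stand-in for a real CI job)."""
    name: str
    run: Callable[[], bool]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order, stopping at the first failure so nothing untested ships."""
    passed = []
    for stage in stages:
        if not stage.run():
            return False, passed
        passed.append(stage.name)
    return True, passed


if __name__ == "__main__":
    # Hypothetical stages mirroring the diagram: fix, test gates, then deployment last.
    pipeline = [
        Stage("build-with-patched-dependency", lambda: True),
        Stage("unit-tests", lambda: True),
        Stage("non-regression-tests", lambda: True),
        Stage("performance-tests", lambda: True),
        Stage("deploy-to-production", lambda: True),
    ]
    ok, passed = run_pipeline(pipeline)
    print("released" if ok else "blocked", passed)
```

Putting deployment last and failing fast is what makes a short fix-to-production time safe: speed comes from automation, not from skipping gates.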

AppSec and storytelling pedagogy

Application security also had its moment in the spotlight, in a very original format. In his talk "Once upon a time there was a flaw: 5 AppSec stories and what we can learn from them," Paul Molin, CISO at Theodo, captivated the audience by using storytelling to convey security messages. Rather than listing best practices in a didactic manner, he recounted true stories of vulnerabilities and attacks, told like modern fairy tales, to draw concrete lessons. The approach is refreshing and remarkably effective with developers: we remember a striking anecdote much better than yet another OWASP checklist learned by heart.

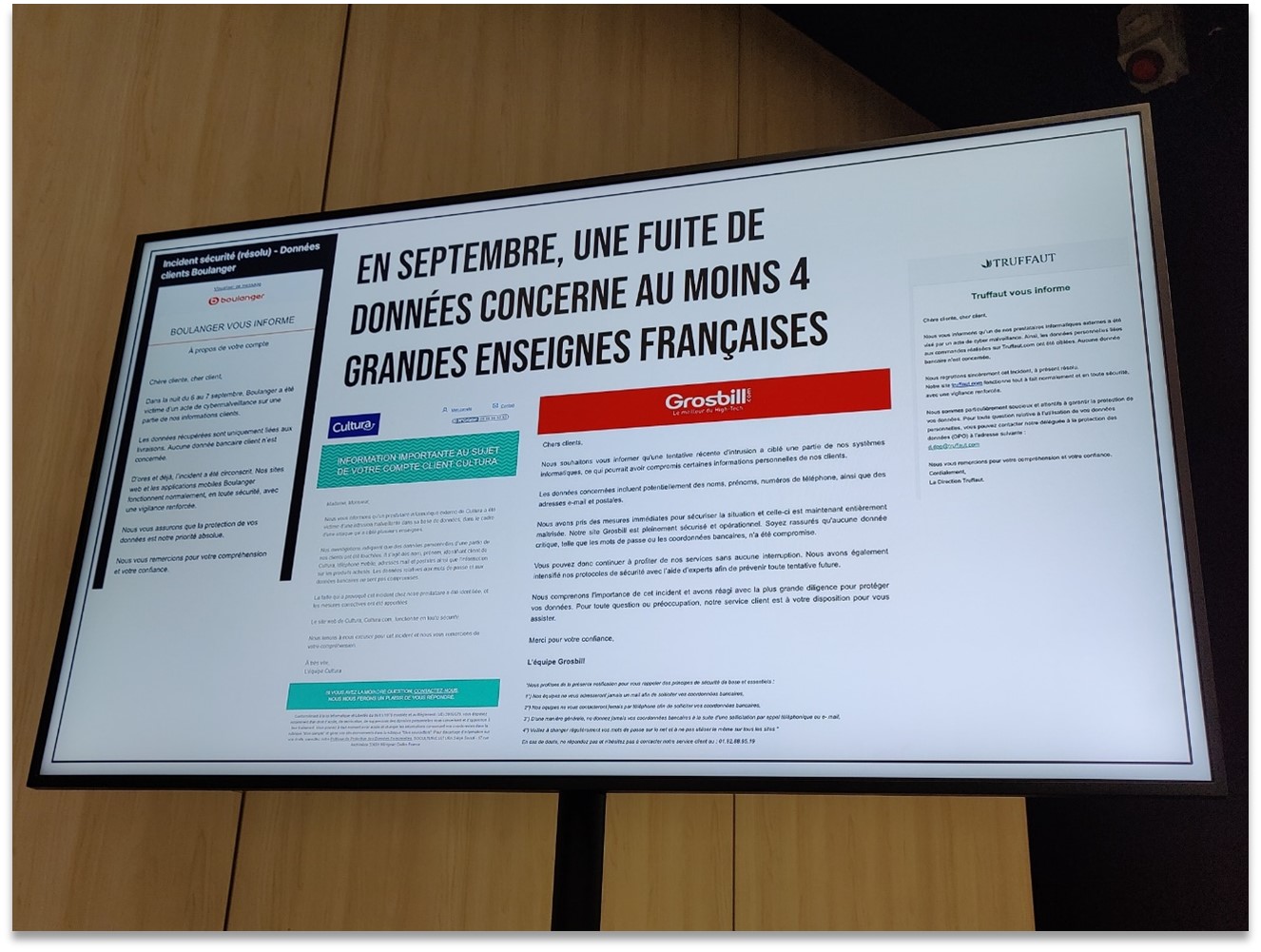

One of the most striking stories involved a massive data leak affecting several well-known French retailers. In September 2024, a single attacker compromised the customer databases of several retail brands, exposing millions of records (names, email addresses, phone numbers, etc.). Boulanger, Cultura, Grosbill, Truffaut... none were spared, and each had to rush to notify its customers of the incident.

Example of vulnerabilities presented: in September 2024, customer data from at least four major French retailers (Boulanger, Cultura, Grosbill, Truffaut) was leaked following an attack by the same hacker, exposing these companies' security weaknesses.

This case illustrated several points. First, even big names are not immune, and a motivated attacker can cause widespread damage when the same weaknesses exist across several targets (such as a shared supplier or similar flaws exploited on each site). Second, the talk dissected how each brand handled its crisis communication, highlighting the importance of transparency with users in the event of an incident. By experiencing this story from the perspective of both the "villain" and the victims, the audience grasps the real impact of a security breach, far beyond theory.

The other stories covered a variety of topics (from unusual minor front-end flaws to sophisticated attacks on software supply chains), each with a moral or lesson for developers and architects. I loved the approach of making security fun and narrative. It's an educational approach worth remembering: why not incorporate these kinds of stories into our internal training or security awareness programs? Storytelling, when used well, can transform security into something concrete and memorable rather than something perceived as an abstract constraint.

During the lunch break, I attended a mini-talk on a more unusual security topic: port knocking. Behind this intriguing name lies an old, almost esoteric technique for hardening server access. The principle is that the server only opens a port (e.g., SSH) after receiving a specific sequence of "knocks" (connection attempts to a series of ports in an agreed order). In his talk "Port Knocking: the 'Open Sesame' of SSH," Paul Jeannot gave us an overview of the method. It was an opportunity to revisit this mechanism, well known to old-school hackers, and to discuss its relevance today.
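To make the mechanism concrete, here is a minimal Python sketch of the server-side logic: track each source IP's progress through the agreed sequence, reset on a wrong port or on a sequence that takes too long, and authorize the IP once the last knock arrives. The port numbers and the 10-second window are hypothetical, and a real daemon (knockd, for instance) observes packets at the network level and then adds a firewall rule, which this sketch does not do.

```python
import time
from typing import Dict, Optional, Sequence, Set, Tuple

# Hypothetical sequence and window; real daemons such as knockd read these from config.
KNOCK_SEQUENCE = (7000, 8000, 9000)
KNOCK_TIMEOUT_S = 10.0


class KnockTracker:
    """Tracks, per source IP, how far a client has progressed through the knock sequence."""

    def __init__(self, sequence: Sequence[int], timeout_s: float) -> None:
        self.sequence = tuple(sequence)
        self.timeout_s = timeout_s
        # ip -> (index of the next expected port, time of the first knock)
        self.progress: Dict[str, Tuple[int, float]] = {}
        self.allowed: Set[str] = set()

    def knock(self, ip: str, port: int, now: Optional[float] = None) -> bool:
        """Record one knock; return True when it completes the sequence."""
        now = time.monotonic() if now is None else now
        index, started = self.progress.get(ip, (0, now))
        if index > 0 and now - started > self.timeout_s:
            index, started = 0, now  # sequence too slow: start from scratch
        if port != self.sequence[index]:
            self.progress.pop(ip, None)  # any wrong knock resets this client
            return False
        index += 1
        if index == len(self.sequence):
            self.progress.pop(ip, None)
            self.allowed.add(ip)  # a real daemon would now open SSH for this IP only
            return True
        self.progress[ip] = (index, started)
        return False


if __name__ == "__main__":
    tracker = KnockTracker(KNOCK_SEQUENCE, KNOCK_TIMEOUT_S)
    for port in KNOCK_SEQUENCE:
        tracker.knock("203.0.113.7", port)
    print(tracker.allowed)
```

The strict reset on any wrong port is a design choice: it makes brute-forcing the order harder, at the cost of being unforgiving with packet reordering on flaky networks.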

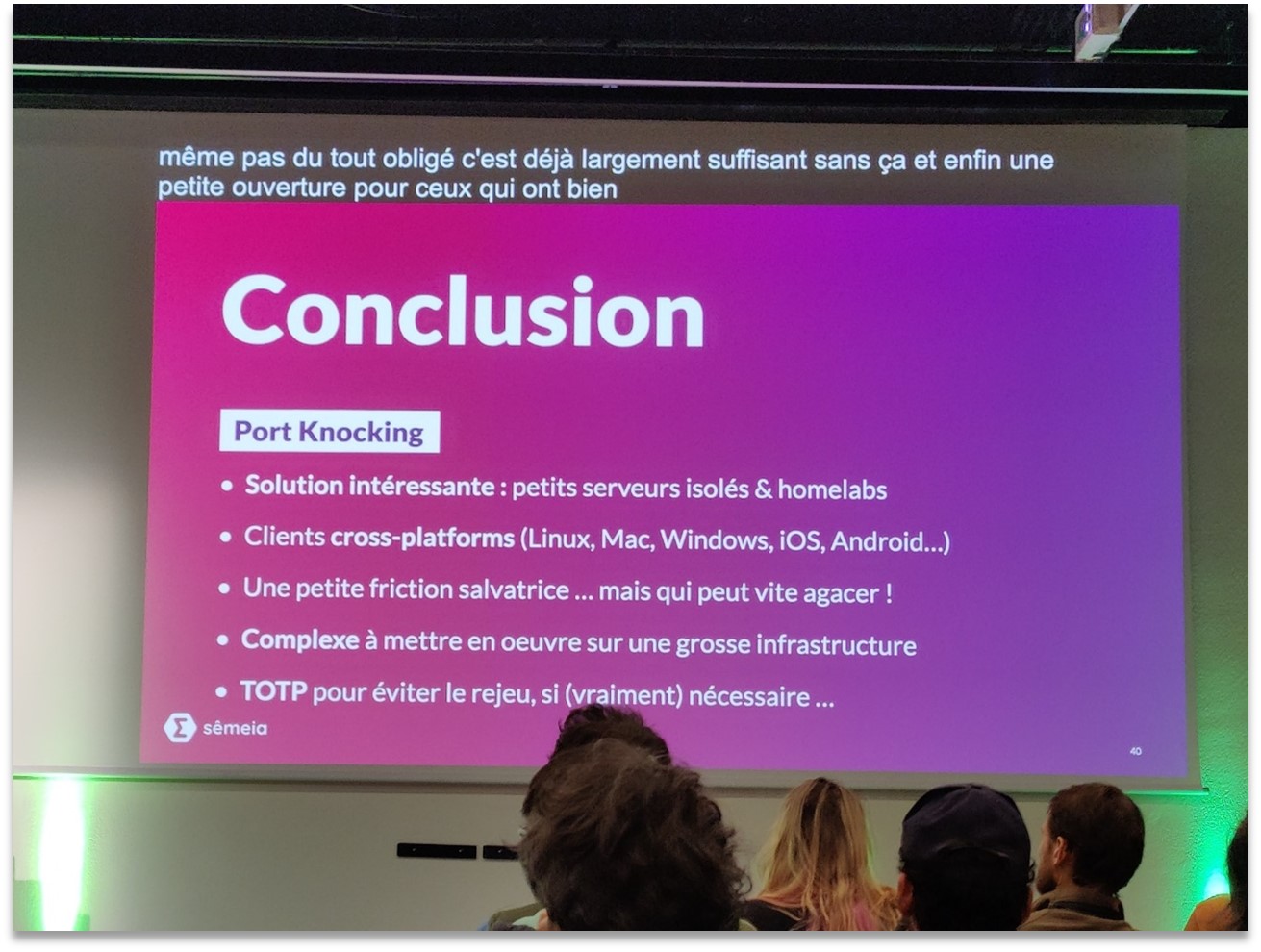

Concluding slide from the talk on Port Knocking – In summary: an interesting solution, especially for small isolated servers or home labs, with clients available on all platforms. It adds an extra layer of friction that can prevent opportunistic attacks, but is difficult to deploy on a large scale in a corporate environment. If used, provide an OTP mechanism to prevent sequence replay.

The conclusion is clear: port knocking can provide an additional layer of security (a welcome bit of friction that stops the most basic bots), but it quickly becomes cumbersome and complex on a large infrastructure. It is best reserved for very specific cases (personal servers, labs) rather than used widely in production. I appreciated that the speaker suggested adding a time-based one-time password (TOTP) to strengthen the process for those who do decide to implement it, proof that this old recipe can be modernized a little. All in all, a light but informative topic, reminding us that in security there are no miracle solutions: each measure has its limits and its context of application.
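One way to combine the two ideas, sketched under my own assumptions rather than taken from the talk, is to derive the knock sequence from a TOTP code, so that a sniffed sequence stops working once the 30-second window rolls over. The `totp` function follows RFC 6238 (HMAC-SHA1, 30-second steps); the mapping from code to ports in `knock_ports` is a hypothetical scheme invented for illustration.

```python
import hashlib
import hmac
import struct
import time
from typing import List


def totp(secret: bytes, for_time: float, step: int = 30, digits: int = 6) -> int:
    """RFC 6238 TOTP with HMAC-SHA1, using RFC 4226 dynamic truncation."""
    counter = int(for_time // step)
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return code % (10 ** digits)


def knock_ports(secret: bytes, for_time: float, base_port: int = 20000) -> List[int]:
    """Hypothetical scheme: split the 6-digit code into three 2-digit chunks, one port each."""
    code = f"{totp(secret, for_time):06d}"
    # Each knock uses its own block of 100 ports, so the three ports never collide.
    return [base_port + 100 * i + int(code[2 * i:2 * i + 2]) for i in range(3)]


if __name__ == "__main__":
    shared_secret = b"hypothetical-shared-secret"  # provisioned on both client and server
    print(knock_ports(shared_secret, time.time()))
```

Client and server share the secret and compute the same ports independently. In practice, the server would also accept the previous window to tolerate clock skew, and the secret would be managed like any other credential.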

Kubernetes, IaC, snapshots... and distributed observability

It's impossible to talk about Devoxx without mentioning Infrastructure and Cloud Native. The afternoon offered several sessions on Kubernetes, Infrastructure as Code, and observability, which I'm grouping together here because they collectively outline the contours of the modern DevOps/Cloud ecosystem.

First, we were given an overview of Kubernetes in 2025 (notably by Alain Regnier during a conference in the main hall). What I took away from this is that Kubernetes has established itself as a de facto standard—to the point that we almost forget it's there, as it has become so commonplace to have clusters for everything and anything. The question is no longer "Should we adopt K8s?" but "How can we make it invisible to developers and manageable for Ops?" We talked about the rise of managed offerings (so you no longer have to worry about the control plane), the growing importance of cost optimization (stopping over-provisioning clusters or launching 1,000 pods when 100 are enough), and the trend toward hybrid multi-cloud or edge architectures that complicate matters. Clearly, Kubernetes is no longer the hype of the moment; it is a mature tool that we are now seeking to refine (simplify its use, improve its intrinsic security, etc.).
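On the over-provisioning point, the Horizontal Pod Autoscaler's core rule is a handy mental model for right-sizing: desired replicas = ceil(current replicas × current metric ÷ target metric), with no change when the ratio stays within a tolerance (0.1 by default). The Python sketch below reproduces only that formula; the workload numbers are hypothetical, and the real controller adds the safeguards listed in the docstring.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Core HPA rule: ceil(currentReplicas * currentMetricValue / desiredMetricValue).

    Simplified: the real controller also handles missing metrics, unready pods,
    min/max replica bounds and scale-down stabilization windows.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # within tolerance of the target: no change
    return math.ceil(current_replicas * current_metric / target_metric)


if __name__ == "__main__":
    # Hypothetical workload: 1,000 pods averaging 5% CPU against a 50% target.
    print(desired_replicas(1000, 5.0, 50.0))  # prints 100
```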

One topic in particular caught my attention: data management and backups in Kubernetes. During a technical talk, I rediscovered (with a demonstration) Kubernetes VolumeSnapshots. For those who are unfamiliar with them, this feature allows you to capture a snapshot of a persistent volume (PersistentVolumeClaim)—a bit like a hot backup of your attached disk—directly via the Kubernetes API.
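For readers who have never written one, here is a minimal sketch of the object involved, built as a Python dict and printed as JSON (which `kubectl apply -f -` accepts as well as YAML). The field names follow the `snapshot.storage.k8s.io/v1` API; the snapshot, claim, and class names are hypothetical, and the cluster needs the snapshot CRDs plus a CSI driver that supports snapshots.

```python
import json


def volume_snapshot(name: str, pvc_name: str, snapshot_class: str,
                    namespace: str = "default") -> dict:
    """Build a snapshot.storage.k8s.io/v1 VolumeSnapshot of an existing PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # The class selects the CSI driver that actually takes the snapshot.
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


if __name__ == "__main__":
    # Hypothetical names; the output can be piped to `kubectl apply -f -`.
    manifest = volume_snapshot("db-data-snap-1", "db-data", "my-snapshot-class")
    print(json.dumps(manifest, indent=2))
```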

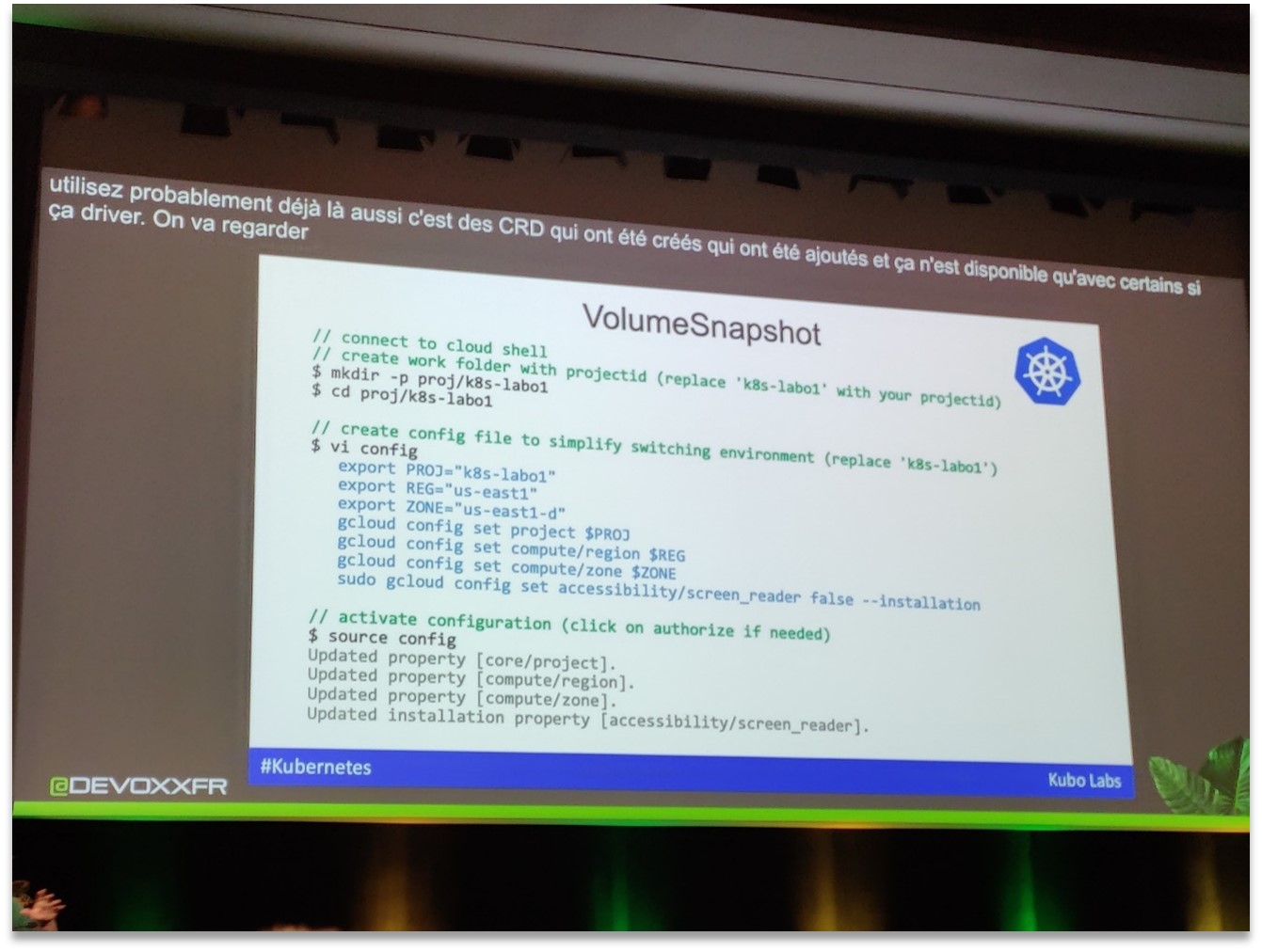

Kubernetes demo excerpt: creating a volume snapshot via the CLI. We see the configuration of the project, the zone, and the use of a CustomResourceDefinition (CRD) "VolumeSnapshot" to trigger the copying of data. This snapshot can then be restored if necessary.

The demo, performed on Google Cloud (GKE), showed the commands for configuring the project and region, then the creation of a Kubernetes VolumeSnapshot resource pointing to a PersistentVolumeClaim backed by a persistent disk. This highlighted that not everything is native in Kubernetes: snapshot support requires the underlying storage provider to implement it (here via GCP's CSI driver). Under the hood, this feature introduces new object types (CRDs) into the cluster. As an infrastructure-as-code enthusiast, I find it fascinating to see this kind of mechanism in action: we treat the infrastructure (in this case, volume backup) "as code," triggered by simple YAML objects in the cluster, which paves the way for automated backup and restore, data portability between environments, and more. It's the kind of detail that reminds us that even once the Kubernetes platform is in place, managing the entire ecosystem (storage, network, etc.) via IaC still raises complex technical challenges.
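The restore side of that automation is just as declarative: a new PersistentVolumeClaim whose `dataSource` points to the snapshot. Here is a minimal Python sketch that builds such a claim; all names, the access mode, and the size are hypothetical, and the requested size must be at least the snapshot's restore size.

```python
import json


def pvc_from_snapshot(name: str, snapshot_name: str, storage_class: str,
                      size: str, namespace: str = "default") -> dict:
    """Build a PVC whose initial content is restored from a VolumeSnapshot."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            # Must be at least the snapshot's restore size.
            "resources": {"requests": {"storage": size}},
            "dataSource": {
                "name": snapshot_name,
                "kind": "VolumeSnapshot",
                "apiGroup": "snapshot.storage.k8s.io",
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical names: restore "db-data-snap-1" into a fresh claim, e.g. in staging.
    manifest = pvc_from_snapshot("db-data-restored", "db-data-snap-1",
                                 "my-csi-storage-class", "10Gi")
    print(json.dumps(manifest, indent=2))
```

Generating both objects from code is what makes the "data portability between environments" idea practical: the same function can target a staging namespace as easily as production.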

Let's talk about Infrastructure as Code (IaC): it was present in almost every infrastructure session. Whether describing Kubernetes deployments, orchestrating the cloud with Terraform, or even coding security policies, the declarative approach is everywhere. A striking example from the afternoon was the importance of GitOps and ultra-simplified deployment. A tool like Kamal (presented in a Tools-in-Action session) promises to deploy an application with "10 lines of configuration," a sign that the community is looking to further abstract away the complexity of Kubernetes and get back to basics (deploying code). For my part, I have noticed in our projects that IaC has become indispensable for reproducibility and traceability: creating a resource manually in a web console without an equivalent in Git is no longer acceptable. This movement toward all-IaC therefore continues to accelerate, with solutions competing in ingenuity to make it accessible.

Another major technical topic of the day was distributed observability. Two speakers, Alexandre Moray and Florian Meuleman, presented the key tools for surviving when production crashes: quite a program! The main idea was to prepare developers (not just Ops) to deal with incidents in complex systems. We often have microservices everywhere, with requests passing through N queues and M databases... When things go wrong, you need to be able to follow the execution path and understand where the problem lies. The talk highlighted the use of OpenTelemetry to instrument code from the outset, in a standard way, in order to collect metrics and traces that can be used later. They also promoted open source solutions such as SigNoz, an observability platform that aggregates and visualizes logs, traces, and metrics (a self-hostable alternative to Datadog and the like).

I learned that by combining OpenTelemetry (for unified collection) with a tool like SigNoz (for analysis), you get a 100% open source stack that you can host yourself to monitor your apps. For us DevSecOps practitioners, this is good news: we can equip our dev teams with accessible observability solutions without necessarily blowing the budget on a proprietary APM, and above all integrate them natively into the development cycle. In short, the message was: "failures will happen sooner or later, so be prepared: put sensors everywhere, set up useful dashboards, and train yourself to read these signals." This is an important reminder that observability is not a luxury but an essential component of the infrastructure, from the design stage onwards.
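The execution path that tracing follows is carried between services in a header. OpenTelemetry propagates context using the W3C Trace Context `traceparent` header by default, and the Python sketch below shows only that header format, with the standard library: the trace ID stays the same across hops while each hop gets a new span ID. It is not OpenTelemetry's API, and `handle_request` is a hypothetical service hop.

```python
import secrets
from typing import Optional, Tuple


def new_traceparent(trace_id: Optional[str] = None) -> str:
    """Build a W3C `traceparent` header: version-traceid-parentid-flags."""
    trace_id = trace_id or secrets.token_hex(16)  # 32 hex chars, shared by the whole request
    span_id = secrets.token_hex(8)                # 16 hex chars, unique to this hop
    return f"00-{trace_id}-{span_id}-01"          # flags 01 = sampled


def parse_traceparent(header: str) -> Tuple[str, str]:
    """Return (trace_id, parent_span_id), rejecting malformed headers."""
    parts = header.split("-")
    if len(parts) != 4 or parts[0] != "00" or len(parts[1]) != 32 or len(parts[2]) != 16:
        raise ValueError(f"malformed traceparent: {header!r}")
    return parts[1], parts[2]


def handle_request(incoming: str) -> str:
    """Hypothetical service hop: keep the trace ID, mint a new span ID for the outgoing call."""
    trace_id, _caller_span = parse_traceparent(incoming)
    return new_traceparent(trace_id)


if __name__ == "__main__":
    edge = new_traceparent()           # e.g. created at the API gateway
    downstream = handle_request(edge)  # forwarded to the next microservice
    print(edge)
    print(downstream)
```

Because every hop shares the same trace ID, a backend such as SigNoz can stitch the spans from N services back into a single request timeline.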

Open source and smart operators: the example of Burrito

At the end of the day, another topic caught my attention: the power of open source to improve our DevSecOps pipelines, illustrated by a tool with a surprising name: Burrito. Behind this playful name lies an open source Kubernetes operator developed by Theodo to simplify Terraform automation. The speakers (Lucas Marques and Luca Corrieri) presented it as a "TACoS," short for Terraform Automation & Collaboration Software. In short, Burrito positions itself as a free alternative to solutions such as Terraform Cloud/Enterprise: it runs Terraform plans and applies automatically, triggered by events (a merged pull request, a push to a branch, etc.), with status tracking, access management, and even drift detection (divergence between the infrastructure as coded and the infrastructure as it actually exists).
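Drift detection boils down to comparing the state declared in code with what actually exists. Terraform itself surfaces drift on the resources it manages when it refreshes state during `terraform plan` (with `-detailed-exitcode`, exit code 2 means changes are pending). The Python sketch below only illustrates the comparison, on plain dicts with hypothetical resources; its "unmanaged" bucket, which a plan does not report, echoes the rule that nothing should be created by hand outside Git.

```python
from typing import Dict, List


def detect_drift(declared: Dict[str, dict], actual: Dict[str, dict]) -> Dict[str, List[str]]:
    """Compare resources declared in code with those observed in the real environment."""
    missing = sorted(declared.keys() - actual.keys())    # in Git, absent from the environment
    unmanaged = sorted(actual.keys() - declared.keys())  # exists, but never declared in Git
    changed = sorted(
        rid for rid in declared.keys() & actual.keys()
        if declared[rid] != actual[rid]                   # edited by hand after deployment
    )
    return {"missing": missing, "unmanaged": unmanaged, "changed": changed}


if __name__ == "__main__":
    # Hypothetical resources and attributes.
    declared = {"bucket.logs": {"versioning": True}, "vm.web": {"size": "small"}}
    actual = {"bucket.logs": {"versioning": False}, "vm.debug": {"size": "large"}}
    print(detect_drift(declared, actual))
```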

I was impressed to see how Burrito integrates natively into Kubernetes—since it works as an operator in the cluster. Specifically, it monitors Custom Resources representing your Terraform stacks and manages the Terraform containers that will apply the changes in the background. So we stay in the GitOps spirit: as soon as I modify the description of my infrastructure in Git, the operator will orchestrate Terraform to reconcile the actual state. All this without leaving my Kubernetes cluster, and with the ability to trace everything in the cluster logs. It's quite elegant and avoids dependence on an external SaaS service.
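The reconciliation loop described above can be sketched as a small decision function: compare the Git revision with what was last planned and applied, then plan, apply, or wait. This is my own simplified Python model, not Burrito's code; `StackStatus` and its fields are hypothetical, and a real operator would run Terraform in pods and record the results in the custom resource's status.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StackStatus:
    """Hypothetical status an operator could keep for one Terraform stack."""
    applied_revision: Optional[str] = None
    planned_revision: Optional[str] = None


def reconcile(desired_revision: str, status: StackStatus, auto_apply: bool) -> str:
    """Decide the next action by comparing the Git revision with what was planned/applied."""
    if status.applied_revision == desired_revision:
        return "noop"  # actual state already matches Git
    if status.planned_revision != desired_revision:
        return "plan"  # new commit: compute the diff first
    return "apply" if auto_apply else "wait-for-approval"


def run_until_converged(desired_revision: str, status: StackStatus,
                        auto_apply: bool = True, max_steps: int = 5) -> List[str]:
    """Simulate successive reconcile loops; a real operator would run Terraform in pods."""
    actions = []
    for _ in range(max_steps):
        action = reconcile(desired_revision, status, auto_apply)
        actions.append(action)
        if action == "plan":
            status.planned_revision = desired_revision  # stands in for `terraform plan`
        elif action == "apply":
            status.applied_revision = desired_revision  # stands in for `terraform apply`
        else:
            break  # converged, or waiting on a human approval
    return actions


if __name__ == "__main__":
    status = StackStatus(applied_revision="abc123")
    print(run_until_converged("def456", status))  # prints ['plan', 'apply', 'noop']
```

Keeping the decision a pure function of (desired revision, recorded status) is what makes the loop safe to re-run at any time, which is the core property of the operator pattern.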

Beyond Burrito itself, this talk highlights a fundamental trend: Kubernetes operators are taking on more and more logic. We started using operators for databases, message brokers, etc.; now we are extending them to DevOps use cases. They are becoming true orchestrators capable of driving other tools (in this case, Terraform) while benefiting from version control, Kubernetes permissions (RBAC), and cluster resilience. For those of us who do DevSecOps, this is a godsend: we can seamlessly combine the world of platforms (K8s) with that of infrastructure as code. And because it's open source, we can adapt it, audit it, contribute to it... In short, it's a great example of a useful community project, born out of a real need (improving our IaC pipelines) and made available to everyone.

I left the Burrito session with lots of ideas in my head: why not try this approach on our own staging environments? If it lives up to its promises, it could simplify life for our infrastructure teams by offering them an internal Terraform-as-a-Service solution, controlled from start to finish.

Devoxx 2025: between clarity and technical pragmatism

The first day of Devoxx France 2025 was intense, covering a wide range of topics from strategic vision (AI, regulation) to practical everyday tools (Kubernetes, Terraform, application security). The common thread I see as a DevSecOps practitioner is the importance of cross-functionality: connecting the dots between these worlds. For example, integrating AI into our products in a reasoned manner while respecting security and ethical constraints, or complying with new laws (CRA) without sacrificing agility, thanks to automation. It also means raising developers' security awareness through new methods such as storytelling, while providing them with robust technology platforms (K8s, tooled IaC pipelines) and innovative open source tools. In just one day, I gathered a wealth of feedback and best practices that I can't wait to share with my colleagues and teams. Once again, Devoxx France sends us home eager to try out lots of new things. I can't wait for the next sessions and discussions!