Generative AI: technological maturity driving impact

AWS 2025 statistics on AI adoption in France (90% of companies report an increase in revenue, 68% of startups use GenAI).

The tone was set right from the opening plenary session: generative AI is now widely adopted by French companies. A study presented during the keynote address indicates that 90% of companies that have deployed generative AI have seen an increase in revenue, while 68% of French startups already use GenAI in their products or services. These rapidly growing figures (+26% year-on-year) reflect the rise of GenAI in all sectors of activity.

In concrete terms, AWS highlighted several customer testimonials illustrating this maturity. Aerospace group Safran, for example, described its use of Amazon Bedrock for technical document analysis and predictive maintenance, which saved €1 million in 18 months. Safran also announced a strengthened partnership with AWS to deploy generative AI at scale, with ambitious goals such as decarbonizing aviation and optimizing its supply chain. Another telling example: Veolia, working with French startup Mistral AI, showed how AI helps detect industrial failures, classify documents, and lay the groundwork for future professional voice assistants. Whether Owkin (healthcare), Qonto (fintech), or Poolside (developer tools), all of these players presented real-world use cases in production, far beyond simple POCs: GenAI has become a concrete operational asset, from supporting maintenance technicians to fighting bank fraud, helping them improve the customer experience, optimize internal operations, and generate new revenue. After the era of simple chatbots in 2024, 2025 is clearly the year of AI agents and high-value generative solutions in production, with concrete ROI and deep integration into existing information systems.

Finally, it would be impossible not to mention Mistral AI, the flagship of French Tech in LLMs, which took advantage of the Summit to announce the availability of its in-house Pixtral Large model on Amazon Bedrock. It is the first European model of its kind offered in serverless mode, fully managed on AWS. For the French tech community, this is a strong signal: our local innovations are finding their place in the global cloud ecosystem. From a practitioner's standpoint, I am pleased to see AWS embracing this diversity of models and approaches: it opens the door to more strategic choices for our own projects (depending on the case, we can opt for an AWS, open-source, local, or other model without integration friction).
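To make the "no integration friction" point concrete, here is a minimal sketch, assuming Amazon Bedrock's Converse API via boto3: the request shape stays the same whichever provider's model you target, so switching models is mostly a matter of changing the `modelId`. The model identifiers, the default Region, and the helper names (`build_converse_request`, `ask`) are our own assumptions for illustration; check the Bedrock console for the exact IDs and any cross-Region inference profile prefix required in your account.

```python
"""Sketch: one request shape for several Bedrock models via the Converse API."""

# Assumed model IDs for illustration only; verify them (and any "eu."/"us."
# inference-profile prefix) in the Bedrock console for your Region.
MODELS = {
    "mistral": "mistral.pixtral-large-2502-v1:0",
    "anthropic": "anthropic.claude-3-7-sonnet-20250219-v1:0",
}


def build_converse_request(model_key: str, prompt: str, max_tokens: int = 512) -> dict:
    """Return keyword arguments for bedrock-runtime's converse() call."""
    return {
        "modelId": MODELS[model_key],
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


def ask(model_key: str, prompt: str, region: str = "eu-west-3") -> str:
    """Send the request; needs boto3 and AWS credentials with Bedrock access."""
    import boto3  # imported lazily so the builder above works without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(model_key, prompt))
    # The reply is a list of content blocks; keep only the text ones.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)
```

Calling `ask("mistral", ...)` or `ask("anthropic", ...)` would then exercise two different providers with identical application code, provided both models are enabled in the account and Region.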

The rise of multi-agent systems: towards collaborative AI

Panel discussion: “From AI assistant to AI creator” with Dust, Anthropic, and Balderton on AI agents and MAS.

As generative AI reaches a certain level of maturity, a new frontier is already opening up: that of multi-agent systems (MAS). Rather than relying on a single conversational agent, the idea is to orchestrate several intelligent agents that collaborate to accomplish complex tasks. This theme, which echoes recent experiments such as AutoGPT, was prominently featured at the Summit.

During a panel discussion entitled "From AI Assistant to AI Creator: The Agent Revolution," experts discussed this upcoming revolution. Alongside AWS, the panel included leading figures in the field such as Guillaume Princen (Head of EMEA at Anthropic), Gabriel Hubert (CEO of Dust), and Zoé Mohl (Principal at Balderton Capital). The panel explored the evolution of conversational AI towards more autonomous and proactive agents, capable not only of answering questions, but also of taking initiative, calling other services, and even collaborating with each other. The speakers emphasized that a single AI agent is no longer sufficient to tackle complex tasks: it is often preferable to orchestrate several specialized agents (known as Multi-Agent Systems or MAS), each of which is an expert in a particular field, and have them interact to achieve a common goal. They all share a vision of a near future where swarms of specialized agents cooperate to solve problems, much like human teams or software microservices. In discussions with my peers, I have noticed that this idea is particularly appealing to R&D profiles—including at Squad, where our work is already exploring multi-agent architectures. It is exciting to see our intuitions confirmed by market trends.

A concrete example of this multi-agent approach came from Qonto, the French neobank, which made a splash by revealing that it had deployed no fewer than 200 AI agents in production to detect fraud and automate financial tasks for PayLater, its deferred-payment service for business customers, preventing €3 million in fraud. This figure is staggering and shows that we are well past the lab-experimentation stage: by coordinating hundreds of agents (some monitoring transactions, others handling compliance checks, etc.), Qonto has reached an unprecedented level of automation and vigilance in its IT system.

Rather than relying on a single monolithic model, Qonto has orchestrated three complementary types of agents to handle each request:

- Vision agents – They use Anthropic's Claude 3.7 LLM (via Amazon Bedrock) to read and extract information from invoices provided by customers (amount, date, supplier, etc.).

- “Research” agents – They query external sources and public APIs (Google via Serper, INPI business registry, etc.) to verify information about the buyer and seller (company existence, solvency, potential risks), based on data extracted by the Vision Agent.

- Analysis Agents – They execute business validation code and cross-reference data (e.g., check payment histories, AML/KYC regulatory compliance) to decide whether or not the transaction can be approved.
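The three-stage flow above can be sketched as a simple sequential pipeline. This is not Qonto's code and does not use the CrewAI API: each "agent" here is a hypothetical plain-Python stand-in (a naive parser instead of Claude 3.7, a hard-coded set instead of registry lookups, a single amount threshold instead of real business rules), kept only to show how each agent enriches a shared case before the next one runs.

```python
from dataclasses import dataclass, field


@dataclass
class Case:
    """Shared state passed from agent to agent."""
    invoice_text: str
    extracted: dict = field(default_factory=dict)
    checks: dict = field(default_factory=dict)
    decision: str = "pending"


def vision_agent(case: Case) -> Case:
    # Stand-in for an LLM reading the invoice: parse naive "key: value" lines.
    for line in case.invoice_text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            case.extracted[key.strip().lower()] = value.strip()
    return case


KNOWN_COMPANIES = {"ACME SAS"}  # hypothetical stand-in for a business-registry lookup


def research_agent(case: Case) -> Case:
    # Verify the supplier extracted by the vision agent.
    supplier = case.extracted.get("supplier", "")
    case.checks["supplier_exists"] = supplier in KNOWN_COMPANIES
    return case


def analysis_agent(case: Case, max_amount: float = 10_000.0) -> Case:
    # Hypothetical business rule: known supplier and amount within a limit.
    amount = float(case.extracted.get("amount", "0"))
    approved = case.checks.get("supplier_exists", False) and 0 < amount <= max_amount
    case.decision = "approved" if approved else "manual_review"
    return case


PIPELINE = (vision_agent, research_agent, analysis_agent)


def process(invoice_text: str) -> Case:
    """Run the case through each agent in order."""
    case = Case(invoice_text)
    for agent in PIPELINE:
        case = agent(case)
    return case
```

Routing uncertain cases to `manual_review` rather than rejecting them reflects the idea of automating only a share of cases at first and widening that share as confidence grows.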

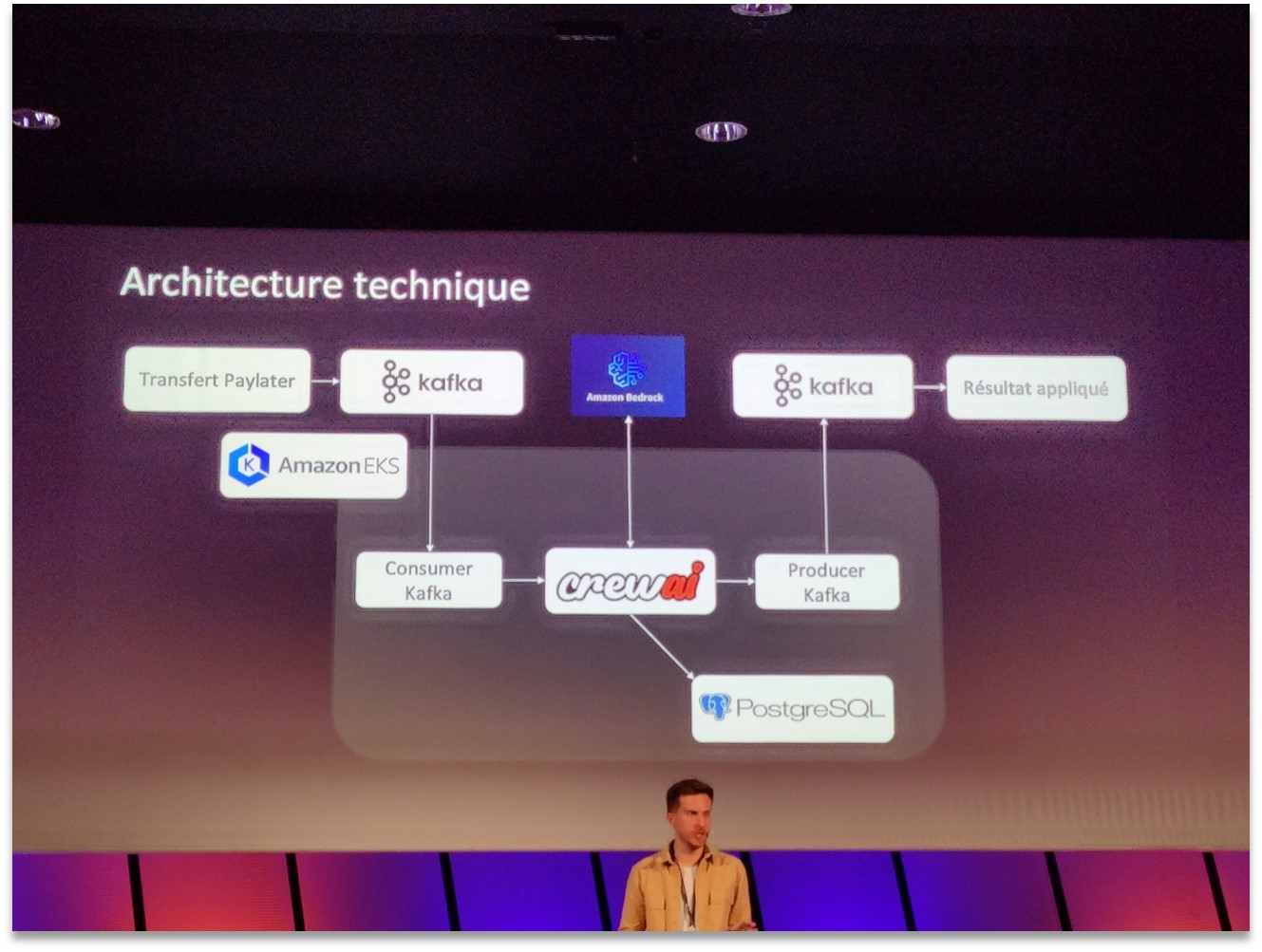

CrewAI technical architecture at Qonto for PayLater processing with Kafka, PostgreSQL, EKS, and Amazon Bedrock.

The architecture presented by Qonto (illustrated above) relies on the open-source CrewAI framework to orchestrate these agents in containers on Amazon EKS. Funding request events ("PayLater Transfer") are published to Kafka and consumed by the CrewAI service that drives the agents; the agents can read and write data in a PostgreSQL database and call Amazon Bedrock models. Once the agents have completed their analysis, the result (approval or rejection of funding) is published back to Kafka and then applied in the transaction system. This multi-agent system has enabled Qonto to cut the processing time for a request from 6 hours to under 5 minutes, automate 25% of cases at launch (with a target of 80% in the near future), and scale without hiring large numbers of staff for manual checks. For DevOps teams, this example shows that with the right architecture, GenAI can be reliably integrated into critical business workflows while remaining scalable and maintainable.
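The event-driven loop described above (consume a request event, run the agents, publish the decision) can be sketched as follows. To keep the example self-contained, in-memory `queue.Queue` objects stand in for the Kafka topics, and `run_agents` is a hypothetical placeholder for the CrewAI crew; a real service would use a Kafka client library and commit the consumer offset only after the decision has been published.

```python
import json
import queue

# In-memory stand-ins for the Kafka request and decision topics (hypothetical).
paylater_requests = queue.Queue()
paylater_decisions = queue.Queue()


def run_agents(request: dict) -> str:
    # Hypothetical placeholder for the multi-agent crew: approve small amounts only.
    return "approved" if request["amount"] <= 5_000 else "rejected"


def consume_once() -> bool:
    """Process one request event if available; return False when the topic is empty."""
    try:
        raw = paylater_requests.get_nowait()
    except queue.Empty:
        return False
    request = json.loads(raw)
    decision = {"request_id": request["request_id"], "decision": run_agents(request)}
    # Publish the result for the transaction system to apply.
    paylater_decisions.put(json.dumps(decision))
    return True
```

Keeping the agents behind a consume/produce boundary means the transaction system never waits on an LLM call synchronously, and the agent service can be scaled on EKS independently of its producers.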

For its part, cement giant Holcim has demonstrated how generative AI-powered agents can automate previously manual business processes: for example, invoice processing has been dramatically accelerated (90% reduction in manual processing thanks to AI). Even in agriculture, a player such as Syngenta is using agent collaboration via Bedrock to optimize agricultural yields, with a 5% increase in productivity for certain crops—a huge gain on the scale of this sector.

The problem with AI "super agents": when a single agent manages too many use cases, it becomes slow, costly, and inaccurate. Hence the appeal of MAS.

More generally, discussions at the Summit warned against the myth of the "super agent" capable of doing everything. A telling slide showed that by trying to entrust too many responsibilities to a single agent (for example, managing an entire e-commerce process, from order taking to after-sales service), you end up with an agent that is slow, costly in terms of resources, and often inaccurate. Conversely, by adopting a modular multi-agent approach, the problem can be broken down into subtasks, each agent can be optimized for its specific task, and a more efficient overall solution can be achieved. This architectural principle, reminiscent of microservices, is becoming established as a best practice for advanced AI applications.
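The modular decomposition described above can be sketched as an orchestrator that hands each step of a plan to a narrowly scoped specialist. The e-commerce steps, agent functions, and plan format are hypothetical; in practice each specialist would wrap its own prompt, model, and tools, which is what keeps each one fast and accurate.

```python
from typing import Callable


# Each specialist handles one narrow step (hypothetical logic).
def order_agent(payload: dict) -> str:
    return f"order {payload['order_id']} registered"


def payment_agent(payload: dict) -> str:
    return f"payment of {payload['amount']:.2f} EUR captured"


def support_agent(payload: dict) -> str:
    return f"ticket opened: {payload['issue']}"


SPECIALISTS: dict[str, Callable[[dict], str]] = {
    "order": order_agent,
    "payment": payment_agent,
    "after_sales": support_agent,
}


def orchestrate(plan: list[tuple[str, dict]]) -> list[str]:
    """Run each (step, payload) pair through the specialist registered for that step."""
    results = []
    for step, payload in plan:
        agent = SPECIALISTS.get(step)
        if agent is None:
            raise ValueError(f"no specialist for step {step!r}")
        results.append(agent(payload))
    return results
```

Failing loudly on an unknown step, rather than falling back to a catch-all agent, is a deliberate choice: it keeps the system from drifting back toward a "super agent".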

However, this also poses new engineering challenges—how can we supervise and debug a constellation of agents? How can we prevent an agent from becoming malicious or ineffective? We will closely monitor feedback from companies such as Qonto to identify best practices.
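One partial answer to the supervision question is to make every agent call observable and to bound how long an agent may loop. The sketch below is entirely our own, not a practice presented at the Summit: it records each call (agent name, input, output, duration, error) in an in-memory `TRACE` list and stops an agent that has not finished within a fixed step budget. A production system would send these records to a tracing backend instead.

```python
import functools
import time

TRACE: list[dict] = []  # in-memory stand-in for a tracing backend


def traced(agent_name: str):
    """Decorator recording each agent call: name, input, output, duration, error."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            record = {"agent": agent_name, "input": args, "output": None, "error": None}
            try:
                record["output"] = fn(*args, **kwargs)
                return record["output"]
            except Exception as exc:
                record["error"] = repr(exc)
                raise
            finally:
                record["seconds"] = time.perf_counter() - start
                TRACE.append(record)
        return wrapper
    return decorator


class StepBudgetExceeded(RuntimeError):
    """Raised when an agent keeps looping past its allowed number of steps."""


def run_with_budget(agent, state, max_steps: int = 5):
    """Call `agent(state) -> (state, done)` until done; stop runaway loops."""
    for _ in range(max_steps):
        state, done = agent(state)
        if done:
            return state
    raise StepBudgetExceeded(f"agent did not finish within {max_steps} steps")
```

With every call traced, a misbehaving agent in a constellation can be located from the trace rather than inferred from the final answer.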

LLM security and governance: essential safeguards

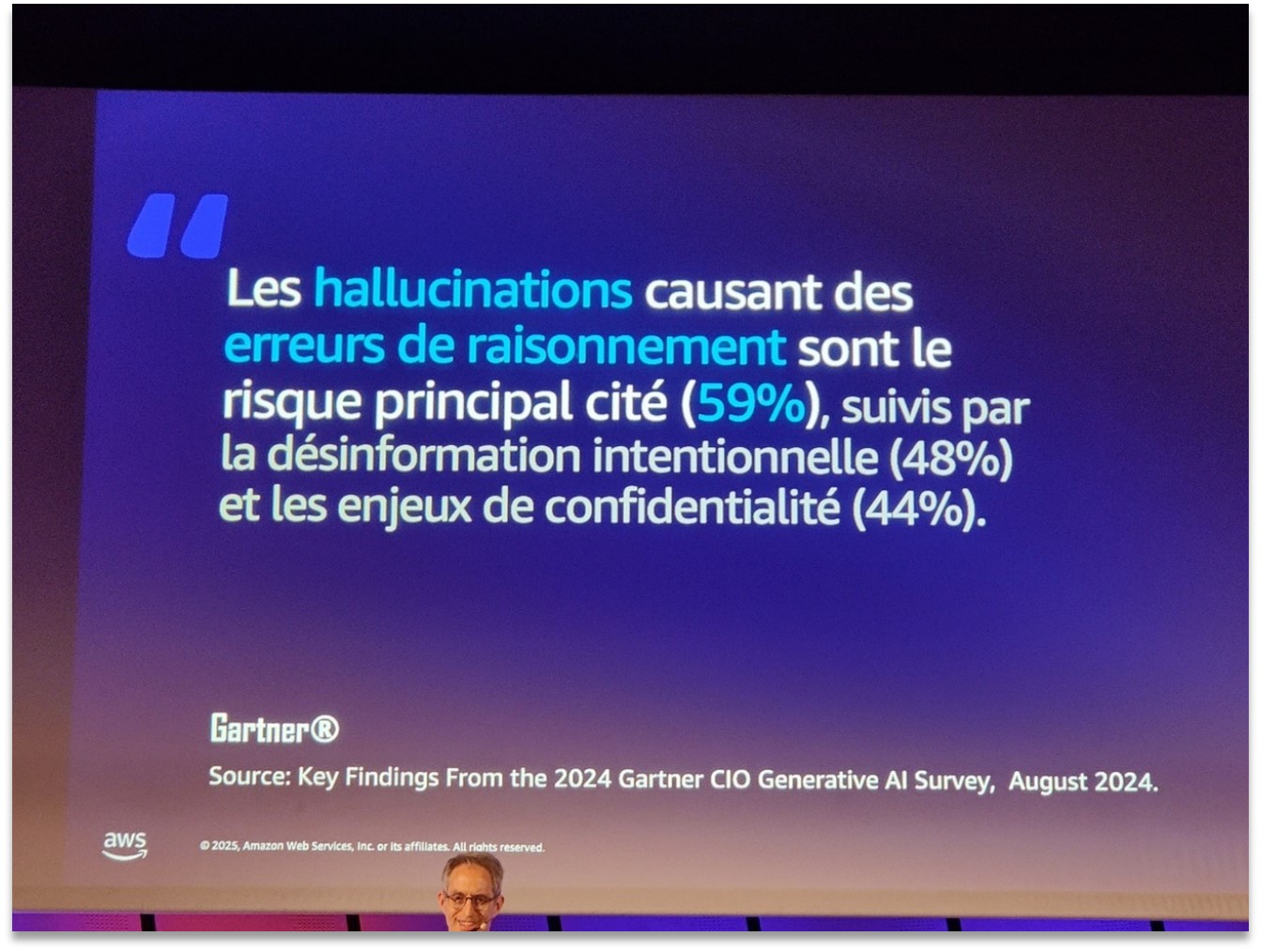

Gartner 2024 study results: 59% of CIOs believe that LLM hallucinations are the main risk, ahead of misinformation and confidentiality.

With the widespread adoption of GenAI, the security and reliability of these systems have become major concerns. According to a Gartner study cited at the Summit, the main concern of CIOs regarding LLMs is the problem of hallucinations (59% of them cite this as the number one risk)—that is, responses invented by the model that can cause decision-making errors. This is followed by the risks of intentional misinformation (48%) and data confidentiality (44%). Aware of these issues, AWS dedicated several sessions to best practices for securing generative AI applications.

The three pillars of GenAI security according to AWS: securing AI apps, using agents for cybersecurity, and protecting against new AI threats.

An informative presentation introduced AWS's "three pillars of generative AI security," represented by a three-legged stool (see above). These three pillars are:

- Securing generative AI applications – ensuring that our chatbots, agents, and other LLM-based applications do not become vectors for attacks.

- Using generative AI to secure the environment – leveraging AI models as defensive tools in cybersecurity.

- Protecting against emerging threats related to generative AI – anticipating and countering new types of attacks made possible by AI (deepfakes, impersonation via synthetic voice, etc.).

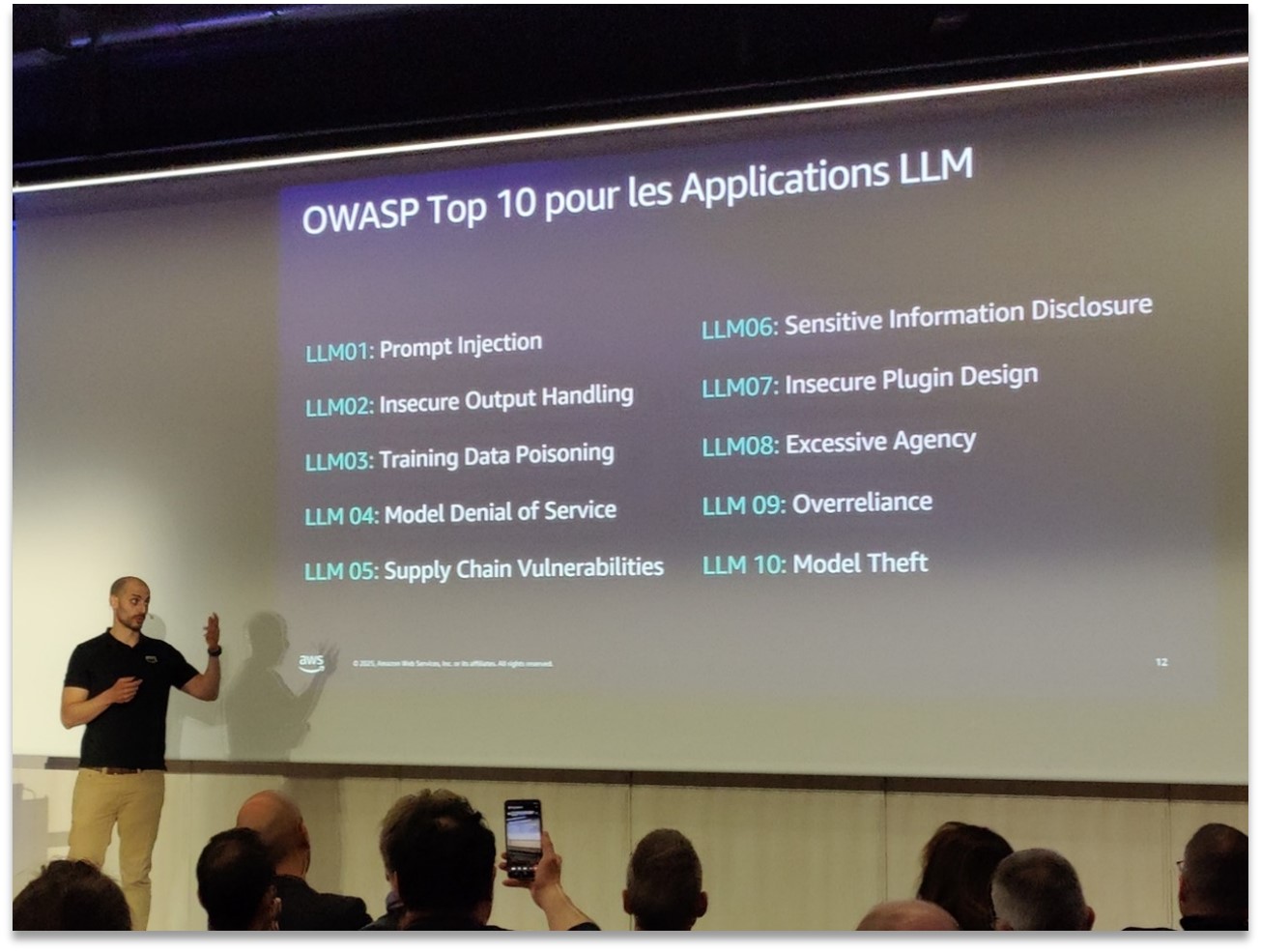

The first pillar concerns protecting our applications against vulnerabilities specific to LLMs. Examples include prompt injection (a malicious user manipulating the model's instructions), leakage of sensitive information in AI responses, and model theft (exfiltration of the weights of a trained model). On this subject, the speakers mentioned that OWASP maintains a Top 10 list of risks specific to LLM applications, similar to the classic Top 10 for web applications.

OWASP Top 10 for LLM applications, with warnings about prompt injection, data leakage, model theft, etc.

Among the LLM risks listed (see illustration above) are LLM01: Prompt Injection, LLM02: Insecure Output Handling, LLM03: Training Data Poisoning, LLM06: Sensitive Information Disclosure, LLM09: Overreliance, and LLM10: Model Theft. For DevSecOps teams, it is crucial to build guardrails against these vulnerabilities in from the design stage: validating and filtering LLM inputs and outputs, managing the lifecycle of the secrets and API keys the LLM uses, monitoring calls to detect possible abuse, etc. The message is clear: a GenAI application must be secured with the same rigor as a critical web application, adapting our tools and reflexes to the specificities of language models.
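As a minimal illustration of input/output filtering, the sketch below screens user input against a few known prompt-injection phrasings and masks e-mail addresses and AWS access key IDs in model output. The patterns are our own assumptions and are deliberately naive: pattern matching alone does not stop prompt injection, and in practice it would only complement managed controls (such as Amazon Bedrock Guardrails) and least-privilege access for whatever the LLM can call.

```python
import re

# Naive, illustrative patterns only; real injection attempts are far more varied.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
AWS_ACCESS_KEY = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def check_input(user_text: str) -> bool:
    """Return False if the input matches a known injection phrasing."""
    return not any(p.search(user_text) for p in INJECTION_PATTERNS)


def redact_output(model_text: str) -> str:
    """Mask e-mail addresses and AWS access key IDs before the answer leaves the app."""
    text = EMAIL.sub("[REDACTED_EMAIL]", model_text)
    return AWS_ACCESS_KEY.sub("[REDACTED_KEY]", text)
```

Filtering on both sides matters: input checks target LLM01, while output redaction limits the damage of LLM06 even when an injection gets through.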

The second pillar highlights how AI can be an ally in cybersecurity. One notable use case presented was that of an AI security agent capable of answering questions about known vulnerabilities and assisting SOC analysts. By combining an LLM with security knowledge bases, incident analysis and responses to developers about vulnerabilities can be accelerated. AWS also demonstrated how to quickly build such an assistant using Amazon Bedrock.

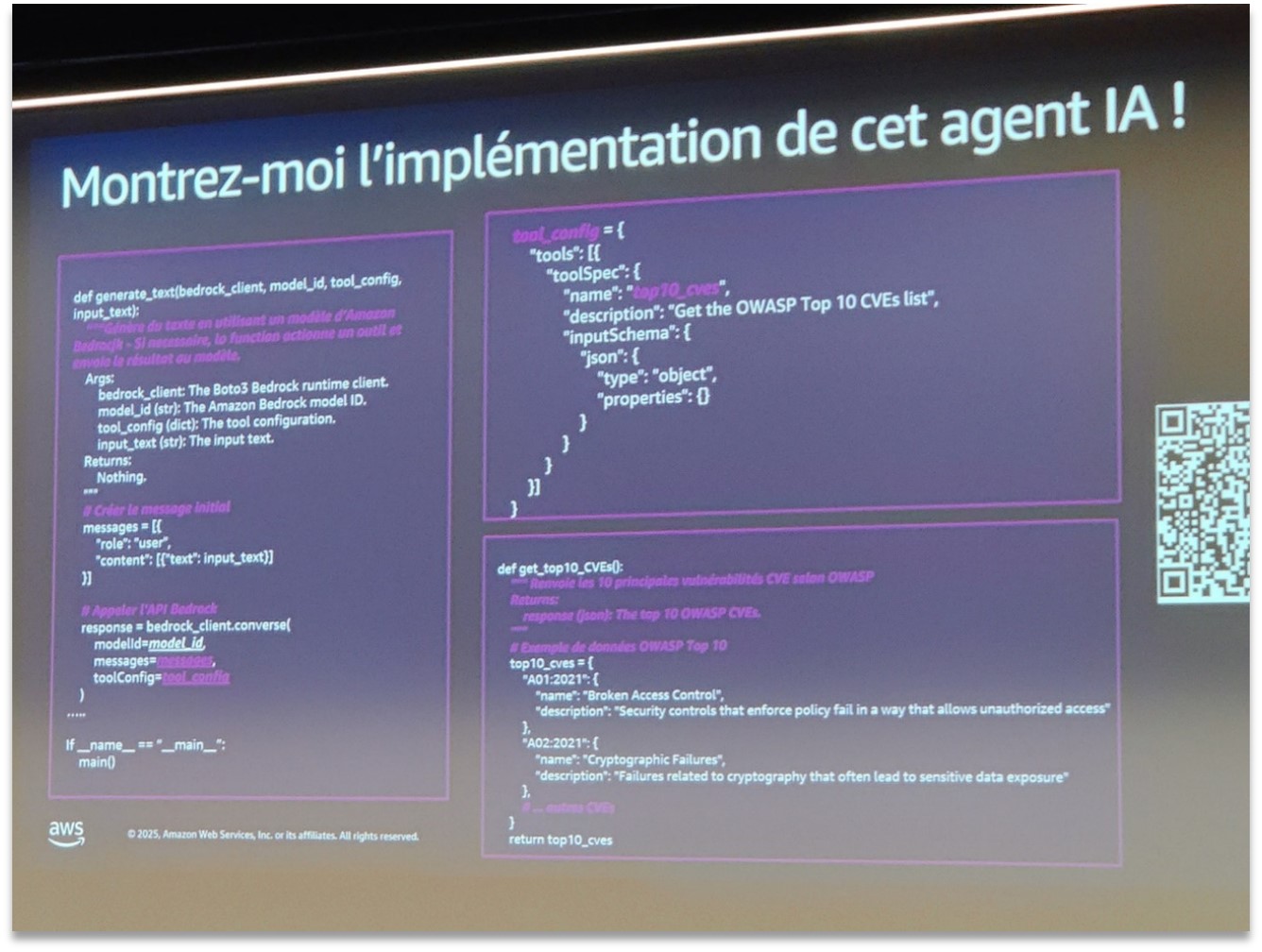

Python code snippet demonstrating the implementation of an AI security agent querying the OWASP Top 10 CVEs via Amazon Bedrock.

The code above illustrates how easy it is to implement a tool-using LLM agent. In Python, we define a tool function (get_top10_cves) that retrieves the list of the top 10 vulnerabilities (here, the OWASP Top 10, whose entries are risk categories rather than individual CVEs). We then configure the Bedrock client to expose this function as a "tool" that the model can call when queried. The result: in about 30 lines of code, we have an assistant that can list critical vulnerabilities or detail the most common ones, backed by a model hosted on Bedrock. This type of agent could be integrated into an internal chatbot, a code scanning tool, or any other security system to increase the productivity of technical teams.
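The screenshot's code is not reproduced here, but a comparable tool-use loop can be sketched with the Bedrock Converse API in boto3. Only the `get_top10_cves` name comes from the talk; its static OWASP Top 10 (2021) data, the tool description, the `run_tool_calls` and `ask` helpers, and the loop structure are our own assumptions. The model answers with `stopReason == "tool_use"` when it wants a tool; the application runs the function and sends the result back as a `toolResult` block until the model produces a final text answer.

```python
# Static data for illustration; a real tool would query an up-to-date source.
OWASP_TOP10_2021 = [
    "A01 Broken Access Control", "A02 Cryptographic Failures", "A03 Injection",
    "A04 Insecure Design", "A05 Security Misconfiguration",
    "A06 Vulnerable and Outdated Components",
    "A07 Identification and Authentication Failures",
    "A08 Software and Data Integrity Failures",
    "A09 Security Logging and Monitoring Failures",
    "A10 Server-Side Request Forgery",
]


def get_top10_cves(limit: int = 10) -> list[str]:
    """Return the first `limit` OWASP Top 10 categories."""
    return OWASP_TOP10_2021[:limit]


TOOLS = {"get_top10_cves": get_top10_cves}

TOOL_CONFIG = {"tools": [{"toolSpec": {
    "name": "get_top10_cves",
    "description": "Return the OWASP Top 10 (2021) web application risk categories.",
    "inputSchema": {"json": {
        "type": "object",
        "properties": {"limit": {"type": "integer", "description": "How many entries to return"}},
    }},
}}]}


def run_tool_calls(content_blocks: list[dict]) -> dict:
    """Execute every toolUse block and wrap the results as a user message."""
    results = []
    for block in content_blocks:
        if "toolUse" in block:
            call = block["toolUse"]
            output = TOOLS[call["name"]](**call["input"])
            results.append({"toolResult": {
                "toolUseId": call["toolUseId"],
                "content": [{"json": {"result": output}}],
            }})
    return {"role": "user", "content": results}


def ask(question: str, model_id: str) -> str:
    """Converse loop; needs boto3, credentials, and a model that supports tool use."""
    import boto3  # lazy import so the offline helpers work without boto3

    client = boto3.client("bedrock-runtime")
    messages = [{"role": "user", "content": [{"text": question}]}]
    response = client.converse(modelId=model_id, messages=messages, toolConfig=TOOL_CONFIG)
    while response["stopReason"] == "tool_use":
        assistant_msg = response["output"]["message"]
        messages += [assistant_msg, run_tool_calls(assistant_msg["content"])]
        response = client.converse(modelId=model_id, messages=messages, toolConfig=TOOL_CONFIG)
    return "".join(b.get("text", "") for b in response["output"]["message"]["content"])
```

Only `ask` needs AWS access; `run_tool_calls` can be unit-tested offline by feeding it a `toolUse` block, which keeps the tool logic testable independently of the model.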

Finally, the third pillar addresses new threats created by AI itself. The aim here is to protect against malicious uses of GenAI: hackers using LLMs to automate phishing or generate sophisticated malware, the mass dissemination of disinformation by AI bots, or indirect attacks such as model poisoning (pushing bad data to skew a model). On this front, AWS recommends active technology monitoring and the implementation of specific defenses. For example, solutions are beginning to emerge to detect synthetic content (deepfakes) or to test models via AI Red Teams. The goal is to keep pace: just as attackers are innovating with AI, defenders must integrate AI into their arsenal and adapt their security strategies. In short, security and AI must move forward hand in hand in the era of GenAI.

Platform Engineering: ever greater efficiency on a large scale

In addition to AI, the Summit also focused on Platform Engineering and new features that make life easier for infrastructure/DevOps teams. One topic in particular caught the attention of attendees: deploying EKS in "Auto" mode.

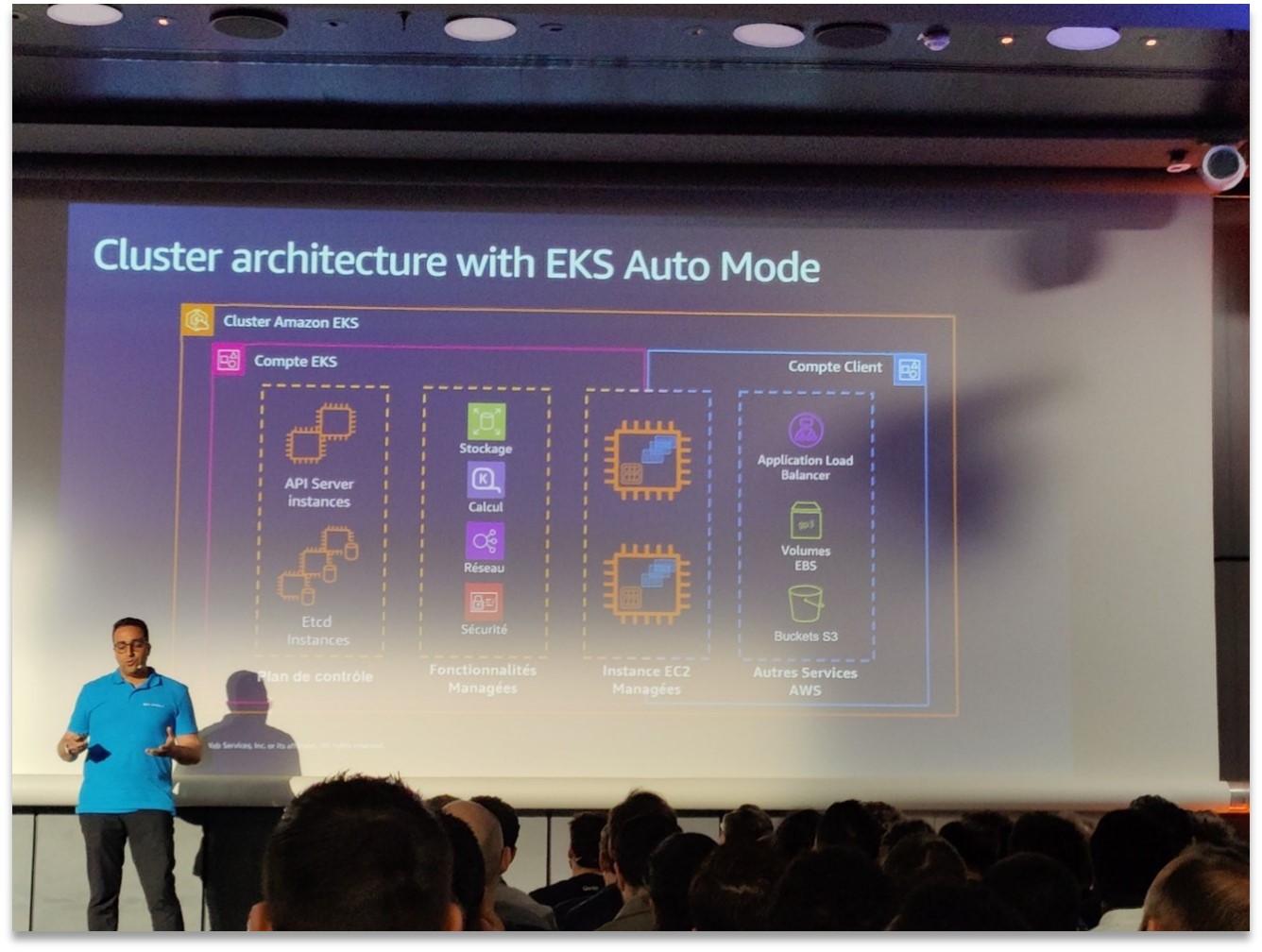

EKS Auto Mode cluster architecture with automated scaling, networking, and storage management by AWS.

EKS Auto Mode (recently announced by AWS) lets you create even more autonomous managed Kubernetes clusters. In concrete terms, when this mode is enabled, AWS automatically takes care of node provisioning (via Karpenter), scaling worker nodes based on pod demand, and part of the network and storage configuration. The diagram above shows the simplified architecture of an EKS Auto Mode cluster: the control plane is still managed by AWS as usual, but compute instances (EC2) and components such as load balancers and EBS volumes are now provisioned dynamically and controlled by the platform itself. For teams, this means less manual management of Kubernetes infrastructure and more time to focus on application workloads. During the session on this topic, we saw demos of clusters being created with just a few commands, without having to worry about instance types or capacity settings, which is ideal for dev/test environments, rapid POCs, or even production once the basic requirements have been clearly defined.
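For reference, enabling Auto Mode through the API might look like the sketch below, which builds the keyword arguments for boto3's `eks.create_cluster()`. It is based on our reading of the EKS API rather than on the session's demo: the role ARNs and subnets are placeholders, the cluster IAM role needs the additional Auto Mode managed policies, and the field names should be checked against the current boto3 documentation before use.

```python
def auto_mode_cluster_params(name: str, role_arn: str, node_role_arn: str,
                             subnet_ids: list[str]) -> dict:
    """Keyword arguments for boto3's eks.create_cluster() with Auto Mode enabled."""
    return {
        "name": name,
        "roleArn": role_arn,
        "resourcesVpcConfig": {"subnetIds": subnet_ids},
        # Auto Mode relies on access entries (API-based cluster authentication).
        "accessConfig": {"authenticationMode": "API"},
        # AWS manages the core components itself instead of self-managed add-ons.
        "bootstrapSelfManagedAddons": False,
        # Node provisioning and scaling (Karpenter-based, run by AWS).
        "computeConfig": {
            "enabled": True,
            "nodePools": ["general-purpose", "system"],
            "nodeRoleArn": node_role_arn,
        },
        # Load balancer and EBS block-storage capabilities.
        "kubernetesNetworkConfig": {"elasticLoadBalancing": {"enabled": True}},
        "storageConfig": {"blockStorage": {"enabled": True}},
    }


def create_cluster(params: dict, region: str) -> dict:
    """Call the EKS API; requires credentials and existing IAM roles and subnets."""
    import boto3  # lazy import so the parameter builder works offline

    return boto3.client("eks", region_name=region).create_cluster(**params)
```

The compute, load-balancing, and block-storage capabilities are enabled together in this sketch, and no instance type or capacity appears anywhere, which is the point the demos made.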

From a Platform Engineering perspective, EKS Auto Mode is a major step forward because it democratizes access to well-managed Kubernetes, even for teams without full-time Kubernetes experts. Of course, purists or those with specific needs can still customize (Auto Mode allows network adjustments, a choice of node types, etc.). But for many organizations, this managed offering will accelerate time-to-market by reducing the operational load. It can be seen as the culmination of earlier trends: the open-source Karpenter project (the node autoscaler developed by AWS, which makes it easy to use Spot Instances) paved the way for more cost-efficient and flexible clusters. In fact, Anthropic reported a 40% reduction in its AWS bill last year thanks to Karpenter. AWS now integrates these mechanisms natively into EKS Auto Mode: there is no longer any need to install and configure Karpenter separately, since auto-provisioning is built in. What we gain in simplicity, we lose (a little) in fine-grained control.

Qonto's SRE team manages 15 EKS clusters (approx. 20,000 pods) with over 150 deployments per day, thanks to GitOps/ArgoCD and Karpenter auto-scaling. Their session at the Summit showed how platform updates, which used to take several days, have been reduced to just one hour thanks to these optimizations.

Furthermore, GitOps has established itself as an essential practice in platform engineering. Whether for deploying applications or managing EKS cluster configuration, many speakers mentioned the use of GitOps tools (Argo CD, FluxCD) to ensure traceability and reproducibility of changes. Combined with solutions such as EKS Auto Mode, GitOps enables a high level of automation while maintaining control via code. This paves the way for internal "self-service" platforms where developers and data scientists can deploy their applications (including ML pipelines or AI models) in just a few clicks, with the infrastructure being managed as code in the background.
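At its core, a GitOps controller such as Argo CD or Flux repeatedly compares the desired state stored in Git with the live state of the cluster and converges one toward the other. The toy reconciler below, our own simplification, shows that comparison on plain dictionaries keyed by resource name. The `prune` flag echoes the idea of opt-in deletion of resources removed from Git (Argo CD's automated sync has a similar prune option), but everything else about real controllers (health checks, sync waves, diffing live Kubernetes objects) is left out.

```python
def reconcile(desired: dict[str, dict], actual: dict[str, dict],
              prune: bool = True) -> dict[str, list[str]]:
    """Return the actions needed to make the live state match the state declared in Git."""
    to_create = sorted(desired.keys() - actual.keys())
    to_update = sorted(k for k in desired.keys() & actual.keys() if desired[k] != actual[k])
    # Resources removed from Git are only deleted when pruning is enabled.
    to_delete = sorted(actual.keys() - desired.keys()) if prune else []
    return {"create": to_create, "update": to_update, "delete": to_delete}


def apply(plan: dict[str, list[str]], desired: dict[str, dict], actual: dict[str, dict]) -> None:
    """Mutate `actual` according to the plan (stand-in for Kubernetes API calls)."""
    for name in plan["create"] + plan["update"]:
        actual[name] = dict(desired[name])
    for name in plan["delete"]:
        del actual[name]
```

Because the plan is derived only from Git and the live state, running the loop twice is harmless: once converged, the second pass finds nothing to do, which is what gives GitOps its traceability and reproducibility.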

Finally, the Platform Engineering movement as a whole is gaining momentum. AWS has communicated extensively on ways to industrialize cloud usage: Terraform or CDK blueprints for rapidly deploying complete platforms (integrating CI/CD, observability, etc.), the growing adoption of GitOps for everything (several sessions showed ArgoCD in action), and the importance of end-to-end automation. The underlying message was clear: to take full advantage of GenAI and new technologies, you need a solid, automated foundation. As a Tech Lead, this reinforces my belief that investing in a robust internal platform (Infrastructure as Code, pipelines, monitoring, integrated security) is not a luxury, but a prerequisite for fast and effective innovation.

AWS Graviton4: 30% better performance compared to Graviton3, 40% better performance for databases, and 45% better performance for Java applications.

In terms of infrastructure and optimization, AWS also presented its latest hardware advances, including its in-house Graviton4 processors, which promise significant gains: +30% performance over Graviton3 in general, and up to +40% on database workloads and +45% on Java applications. For Ops teams, these hardware improvements translate into lower costs (more performance per dollar spent) and the ability to run intensive workloads (big data, ML model training, etc.) more efficiently. For the DevOps community, this is a reminder that optimization also means choosing the right infrastructure: taking advantage of the latest AWS offerings (Graviton instances, GPU-optimized instances, I/O-optimized storage, etc.) is an integral part of operational excellence.
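The "more performance per dollar" argument is simple arithmetic: cost per unit of work equals the hourly price divided by throughput. The helper below assumes, as a simplification, that a workload's throughput rises exactly by the quoted percentage; the 10% price premium in the last example is hypothetical and not an AWS price.

```python
def relative_cost_per_unit(perf_gain: float, price_ratio: float = 1.0) -> float:
    """Cost per unit of work on the new instance, relative to the old one.

    perf_gain: throughput gain, e.g. 0.30 for +30%.
    price_ratio: new hourly price divided by old hourly price (assumed).
    """
    return price_ratio / (1.0 + perf_gain)


for label, gain, price in [
    ("general, same price", 0.30, 1.0),
    ("Java, same price", 0.45, 1.0),
    ("general, hypothetical +10% price", 0.30, 1.10),
]:
    print(f"{label}: {relative_cost_per_unit(gain, price):.3f}")
```

At the same price, +30% throughput means about 23% lower cost per unit of work (0.769), and +45% about 31% lower (0.690); a 10% price premium would shrink the first saving to about 15% (0.846).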

From ideation to production: best practices for generative AI

Let's conclude this recap with a cross-cutting issue that struck me: how do we move from experimentation to production in generative AI projects? Many speakers emphasized best practices for making this transition successfully.

The life cycle of a GenAI project was dissected in the keynote speech using the concept of "AI Foundations." Upstream, it begins with data readiness. There can be no high-performance model without high-quality data: several speakers (including Safran and Owkin) emphasized the importance of modernizing and centralizing enterprise data before diving into deep learning. In this regard, services such as AWS Glue, Lake Formation, and the integration of Apache Iceberg with S3 (announced at the Summit) help to create governed, versioned data lakes that are ready to feed models. I noted, for example, that Owkin has set up MLOps pipelines on AWS to aggregate multi-source medical data while ensuring anonymization, proving that it is possible to reconcile data richness and compliance.
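Owkin's pipelines were not shown in code, but a common building block for combining records from several sources without exposing direct identifiers is keyed pseudonymization: each patient ID is replaced with an HMAC, so the same patient gets the same token across sources while the token cannot be recomputed or linked back without the key. The field names and key handling below are hypothetical. Note also that pseudonymized data is not anonymous in the GDPR sense; true anonymization requires further measures (generalization, aggregation, re-identification risk analysis).

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Keyed SHA-256 hash: stable per patient, not computable without the key."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, secret_key: bytes, drop=("name", "address")) -> dict:
    """Replace the direct identifier with a token and drop other identifying fields."""
    clean = {k: v for k, v in record.items() if k not in drop and k != "patient_id"}
    clean["pseudo_id"] = pseudonymize(record["patient_id"], secret_key)
    return clean
```

Records from two hospitals can then be joined on `pseudo_id`, while the key itself stays in a secrets manager outside the data lake.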

Once a model has been chosen, the question of fine-tuning comes up, and it was widely discussed. The trend is toward parsimony: fine-tune only when necessary. Many use cases can already be covered with careful prompt engineering on a generic model, or with embeddings + RAG (Retrieval-Augmented Generation) to bring in business knowledge without altering the model. Fine-tuning is reserved for cases where it adds real value (a specific tone, business jargon, performance on a specific task) and must comply with security rules (no data leaks in the training set, use of feature stores to track what was used for training, etc.). I also noted that AWS offers tools that facilitate fine-tuning while monitoring model drift, and that allow the fine-tuned model to be distilled if necessary (to create a lighter version that can run in production on standard hardware).
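The embeddings + RAG pattern mentioned above boils down to retrieving the most relevant passages for a question and placing them in the prompt, so that the model answers from business documents without being retrained. In the sketch below, the "embedding" is a toy word-count vector compared by cosine similarity, an assumption made to keep the example self-contained; a real pipeline would use an embedding model and a vector store, then send the prompt to the LLM.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real system would call an embedding model.
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Ground the question in retrieved context instead of the model's own memory."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return ("Answer using only the context below. If the answer is not there, say so.\n"
            f"Context:\n{context}\n\nQuestion: {query}")
```

The instruction to answer only from the context is also a cheap mitigation for the hallucination risk discussed earlier, since the model is told to admit when the documents do not cover the question.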

In short, the production best practices for generative AI highlighted at the Summit boil down to a few points: well-prepared and governed data, careful selection of models (and of their orchestration), an architecture integrated with the rest of the application ecosystem, and security "by design" (safeguards, monitoring, access control) throughout the pipeline. This may seem like a lot of requirements, but it is the price to pay for generative AI to deliver value in the field. As a tech lead, this advice resonates strongly with my own experience: the devil is in the details (a model that shines in a demo may fail in production for lack of up-to-date data or adequate ops), hence the importance of a full-stack approach encompassing both model and infrastructure.

Last but not least, let's not forget about training and culture. The Summit showed that the technology is ready (or nearly ready) for GenAI, but successful adoption also depends on bringing teams up to speed. It is important to train developers in new practices (prompt engineering, use of AI APIs, MLOps), to raise awareness among security teams about the specificities of LLMs, and to involve business units in identifying the right use cases.

A promising future, unchanged fundamentals

The AWS Summit Paris 2025 exceeded my expectations as a tech enthusiast. I left feeling both inspired by the new developments and reassured in my convictions. Yes, generative AI is now mature enough for concrete, large-scale applications, and AWS provides a robust ecosystem (dedicated chips, varied models, deployment tools) to take advantage of it. Yes, the multi-agent approach looks set to be the next step, opening up unprecedented opportunities that we will explore with passion, while keeping in mind the orchestration and security challenges that this poses. And on the subject of security, I welcome the clear-sighted reminder that without trust and governance, no innovation can be sustainable—it is up to us to implement these safeguards in our projects right now.

Between the lines, this Summit reminds us that DevOps/DevSecOps fundamentals remain more relevant than ever. Whether deploying an LLM-driven chatbot or a more traditional cloud application, the recipes that work are the same: an automated, scalable, and observable platform, smooth CI/CD pipelines, and a culture of continuous improvement and knowledge sharing. The tools are evolving (Kubernetes is becoming self-driving, AI is everywhere), but our mission as engineers remains to maintain control—technical, operational, and ethical—over these powerful technologies.

For Squad and our tech community, the adventure continues with a clear direction. This feedback from the AWS Summit 2025 is not an end in itself, but an inspiring milestone. It's up to us to build on this momentum by applying these insights to our upcoming DevSecOps and MLSecOps projects, sharing our findings in turn, and always maintaining that balance between enthusiasm for innovation and the need for reliability. As I like to say, "Innovating is good... innovating safely and robustly is better!"